Domain Discovery is a simple tool that leverages the API from xreverselabs.org to automatically find and store domains. This tool supports domain scraping in multiple iterations, with configurable delays between requests, and ensures that no duplicate results are stored during the scraping process.

- Automated Scraping: Automatically fetch domain data from the xreverselabs.org API.

- Random User-Agent: Uses `fake_useragent` to simulate a real browser request with varied User-Agent headers.

- Duplicate-Free Domains: Ensures no duplicate domains are saved between scraping requests.

- Colored Output: Uses the `colorama` library for colored terminal output, making it easier to read.

- Save Results: Automatically saves the scraping results in a `result_discover.txt` file.
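The README does not show how the User-Agent header is built. With `fake_useragent`, a random browser string is available from `UserAgent().random`. The stdlib-only sketch below imitates that idea with `random.choice` over a small list so it runs without extra packages. `build_headers` and `SAMPLE_USER_AGENTS` are hypothetical names used for illustration and are not part of the tool.

```python
import random

# Hypothetical stand-in list; the actual tool draws User-Agents from fake_useragent.
SAMPLE_USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_5) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def build_headers(rng=random):
    """Return request headers with a randomly chosen User-Agent (hypothetical helper)."""
    return {"User-Agent": rng.choice(SAMPLE_USER_AGENTS)}


if __name__ == "__main__":
    # Each call may pick a different User-Agent string.
    for _ in range(3):
        print(build_headers()["User-Agent"])
```

With `fake_useragent` installed, `UserAgent().random` would take the place of `rng.choice(SAMPLE_USER_AGENTS)`. Accepting `rng` as a parameter lets a seeded `random.Random` make the choice reproducible in tests.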

Before running this tool, make sure you have the following:

- Python 3.8 or higher

- An API key from xreverselabs.org (use `FREE-TRIAL` for a free version)

- A few Python libraries that need to be installed (see the installation steps below)

Clone this repository to your local directory and change into it:

`git clone https://github.com/xReverseLabs/Domain-Discovery-python.git`

`cd Domain-Discovery-python`

Install all the required dependencies using `pip`:

`pip install -r requirements.txt`

`requirements.txt` includes the following packages: `requests`, `fake_useragent`, and `colorama`.

Once all dependencies are installed, you are ready to run the tool!
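If you want to confirm the install before the first run, a small check like the one below reports which of the three packages cannot be found. This is a hypothetical helper (`missing_packages` is not shipped with the repository); it relies only on `importlib.util.find_spec`, which returns `None` for a top-level module that is not installed.

```python
import importlib.util
from typing import Iterable, List

# Import names of the packages listed in requirements.txt.
REQUIRED = ("requests", "fake_useragent", "colorama")


def missing_packages(names: Iterable[str] = REQUIRED) -> List[str]:
    """Return the module names that cannot be found on the current Python path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All dependencies are installed.")
```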

Run the main script using the following command:

`python Domain_Discovery.py`


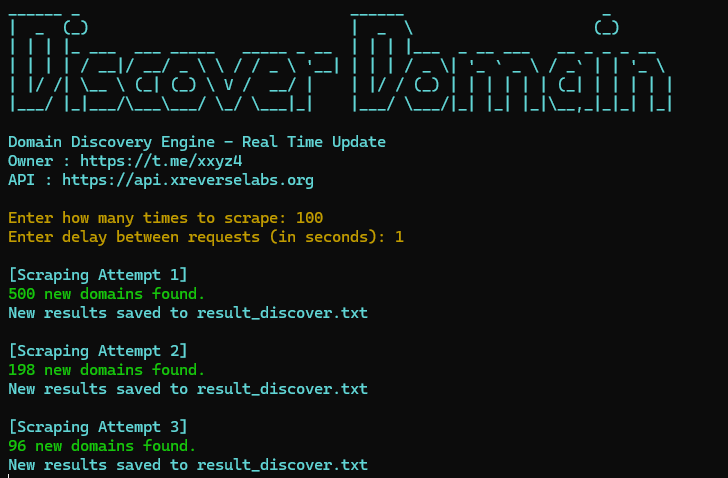

Upon running, you will be prompted to enter the number of iterations (how many times the scraping will be performed) and the delay in seconds between requests. For example:

Enter how many times to scrape: 10

Enter delay between requests (in seconds): 5
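The iteration-and-delay flow described above can be sketched roughly as follows. This is an assumption about the structure, not the tool's actual code: `run_scraper`, the `fetch_domains` callback, and the injectable `sleep` parameter are hypothetical, and the request to the xreverselabs.org API is deliberately left out because the README does not document its endpoint or response format.

```python
import time
from typing import Callable, Iterable, List


def run_scraper(
    iterations: int,
    delay: float,
    fetch_domains: Callable[[], Iterable[str]],
    sleep: Callable[[float], None] = time.sleep,
) -> List[List[str]]:
    """Call fetch_domains() `iterations` times, waiting `delay` seconds between calls.

    fetch_domains is a hypothetical callback standing in for the xreverselabs.org
    API request; each attempt's results are collected and returned.
    """
    if iterations < 1:
        raise ValueError("iterations must be at least 1")
    if delay < 0:
        raise ValueError("delay must not be negative")

    batches: List[List[str]] = []
    for attempt in range(1, iterations + 1):
        print(f"[Scraping Attempt {attempt}]")
        batches.append(list(fetch_domains()))
        # Pause between requests; this sketch skips the pause after the last attempt.
        if attempt < iterations:
            sleep(delay)
    return batches


if __name__ == "__main__":
    # Dummy fetcher and zero delay, for illustration only.
    run_scraper(2, 0, lambda: ["example.com", "sample.net"])
```

In the interactive tool, the two values would come from the prompts, for example `int(input("Enter how many times to scrape: "))` and `float(input("Enter delay between requests (in seconds): "))`. Passing `sleep` in as a parameter keeps the loop testable without real waits.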

The scraping results are printed in the terminal and saved to a `result_discover.txt` file. If a domain was already found by a previous request, the tool does not store it again.
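One way to implement the duplicate check is to keep a set of every domain already written and append only unseen ones to the results file. The sketch below assumes the file stores one domain per line; `save_new_domains` is a hypothetical helper, and its lowercasing and whitespace trimming are assumptions (domain names are case-insensitive) that the original tool may or may not apply.

```python
from pathlib import Path
from typing import Iterable, Set


def save_new_domains(
    domains: Iterable[str], seen: Set[str], path: str = "result_discover.txt"
) -> int:
    """Append domains not in `seen` to `path`, one per line; return how many were new.

    `seen` is updated in place so later attempts skip these domains too.
    """
    new = []
    for raw in domains:
        domain = raw.strip().lower()
        # Skip blanks and anything already stored, including repeats within this batch.
        if domain and domain not in seen:
            seen.add(domain)
            new.append(domain)

    if new:
        with Path(path).open("a", encoding="utf-8") as fh:
            fh.write("\n".join(new) + "\n")
    return len(new)
```

Calling it once per attempt with a shared `seen = set()` gives the count needed for messages like the sample output below: print "N new domains found." when the return value is positive and "No new domains found in this request." when it is zero.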

[Scraping Attempt 1]

15 new domains found.

New results saved to result_discover.txt

[Scraping Attempt 2]

No new domains found in this request.

[Scraping Attempt 3]

8 new domains found.

New results saved to result_discover.txt

The `result_discover.txt` file will look like this:

example.com

sample.net

domain.org

...
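Because the file holds one domain per line, reading it back into a set is straightforward, for example to seed a duplicate check on a later run or to pass the results to another tool. `load_domains` is a hypothetical helper; the README does not say whether the tool itself reloads this file at startup.

```python
from pathlib import Path
from typing import Set


def load_domains(path: str = "result_discover.txt") -> Set[str]:
    """Return the set of domains stored one per line in `path`.

    A missing file yields an empty set; blank lines are ignored.
    """
    file = Path(path)
    if not file.exists():
        return set()
    with file.open(encoding="utf-8") as fh:
        return {line.strip() for line in fh if line.strip()}
```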

Contributions are welcome! If you would like to contribute, please fork this repository and submit a pull request with improvements or new features. You can also open an issue if you find any bugs or have feature ideas.

- Fork this repository

- Create a new feature branch (`git checkout -b feature/AmazingFeature`)

- Commit your changes (`git commit -m 'Add some AmazingFeature'`)

- Push to the branch (`git push origin feature/AmazingFeature`)

- Open a Pull Request

This project is licensed under the MIT License. See the LICENSE file for more details.